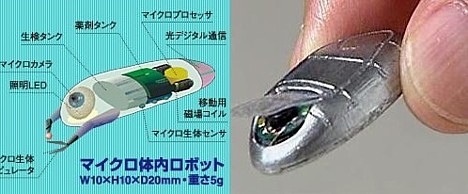

On February 26, researchers from Ritsumeikan University and the Shiga University of Medical Science completed work on a miniature robot prototype that, once inserted into the body through an incision, can be freely controlled to perform medical treatment and capture images of affected areas. The plastic-encased minibot, which measures 2 cm (0.8 inch) in length and 1 cm (0.4 inch) in diameter, can be maneuvered through the body by controlling an external magnetic field applied near the patient.

While other types of miniature swallowable robots have been developed in the past, their role has mostly been limited to capturing images inside the body. According to Ritsumeikan University professor Masaaki Makikawa, this new prototype robot has the ability to perform treatment inside the body, eliminating the need for surgery in some cases.

The researchers developed five kinds of prototypes with features such as image capture functions, medicine delivery systems, and tiny forceps for taking tissue samples. MRI images of the patient taken in advance serve as a map for navigating the minibot, which is said to have performed swimmingly in tests on animals. Sensor and image data are relayed to a computer via an attached 2-mm-diameter cable, which looks like it can also serve as a safety line in case the minibot gets lost or stranded.

[Source: Chugoku Shimbun]

Hitachi has successfully tested a brain-machine interface that allows users to turn power switches on and off with their mind. Relying on

On June 20, an Okayama University team of researchers led by Professor Shogo Minagi unveiled a nasal airflow regulator designed to alleviate voice loss such as that which sometimes occurs after a stroke.
