U-Tsu-Shi-O-Mi is an interactive "mixed reality" humanoid robot that appears as a computer-animated character when viewed through a special head-mounted display. A virtual 3D avatar that moves in sync with the robot's actions is mapped onto the machine's green cloth skin (the skin functions as a green screen), and the sensor-equipped head-mounted display tracks the angle and position of the viewer's head and constantly adjusts the angle at which the avatar is displayed. The result is an interactive virtual 3D character with a physical body that the viewer can literally reach out and touch.
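Because the robot's skin works as a green screen, the basic compositing step is a form of chroma keying: wherever the camera sees the green cloth, the rendered avatar is shown instead. The sketch below is a hypothetical illustration only. It assumes simple per-pixel keying on RGB frames, and the names `is_green` and `composite` and the thresholds `min_green` and `dominance` are invented for this example. It is not the actual U-Tsu-Shi-O-Mi implementation, which would also have to handle head tracking and lighting.

```python
from typing import List, Tuple

# Hypothetical types: a pixel is an (R, G, B) tuple, a frame is rows of pixels.
Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]


def is_green(pixel: Pixel, min_green: int = 100, dominance: int = 40) -> bool:
    """Assumed key test: bright enough green that clearly outweighs red and blue."""
    r, g, b = pixel
    return g >= min_green and g - max(r, b) >= dominance


def composite(camera: Frame, avatar: Frame, min_green: int = 100, dominance: int = 40) -> Frame:
    """Replace green 'skin' pixels in the camera frame with the avatar render.

    Both frames must have the same dimensions; in a real system the avatar
    frame would be rendered from the tracked head position.
    """
    if len(camera) != len(avatar) or any(len(c) != len(a) for c, a in zip(camera, avatar)):
        raise ValueError("camera and avatar frames must have the same dimensions")
    return [
        [
            av if is_green(cam, min_green, dominance) else cam
            for cam, av in zip(cam_row, av_row)
        ]
        for cam_row, av_row in zip(camera, avatar)
    ]


if __name__ == "__main__":
    camera = [[(20, 200, 30), (200, 180, 170)]]  # green cloth, then a skin-tone pixel
    avatar = [[(255, 0, 0), (0, 0, 255)]]        # rendered avatar pixels
    print(composite(camera, avatar))  # [[(255, 0, 0), (200, 180, 170)]]
```

Only the green pixel is swapped for the avatar; the viewer's own hand (the second pixel) stays visible, which is what lets the viewer see and touch the character at the same time.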

Researcher Michihiko Shoji, formerly of NTT DoCoMo, helped create U-Tsu-Shi-O-Mi as a tool for enhancing virtual reality simulations. He now works at the Yokohama National University Venture Business Laboratory, where he continues to improve the virtual humanoid. The system currently requires bulky, expensive equipment, so its first real-world applications will likely be arcade-style video games. However, Shoji also sees a potential market for personal virtual humanoids and is looking for ways to reduce the system's size and cost so that it becomes suitable for household use.


The virtual humanoid will be on display at ASIAGRAPH 2007 in Akihabara (Tokyo) from October 12 to 14.

[Source: Robot Watch]

A vending machine at Tokyo's Akihabara station is now offering a limited run of canned bishoujo bread in celebration of the new Clannad

A vending machine at Tokyo's Akihabara station is now offering a limited run of canned bishoujo bread in celebration of the new Clannad

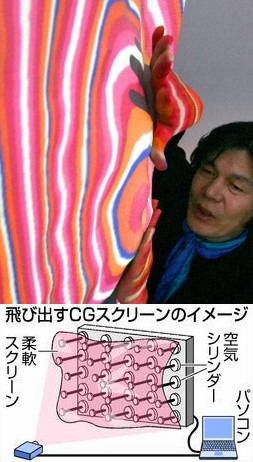

Here's a groovy display for people looking to add that extra dimension to their viewing material...

Here's a groovy display for people looking to add that extra dimension to their viewing material...